Introduction

A Walk is the thesis film for my M.F.A. degree at SCAD. It is a 3D animated film. I am responsible for all visual parts of the project; Shiwen Zhu is the sound designer.

For this project, I want to replicate the experience of dreaming. The short film is built from elements that are familiar from real life, but those elements disobey the rules of reality.

Index

Pre-production

Production

Storyboard

Concepts

Character A - The Dictator

One of the main characters is the Dictator, who comes from South America in the 1500s. He has managed to extend his life in several ways. In 1582, he used dark magic to revive himself after being killed in the Colombia war. In 1912, he fell sick during the big pandemic and was cured by medical technology from the future. No one knows how he gained access to that technology. His third life extension was to replace his decaying original head with a prosthetic one.

He has a huge belly because of his unhealthy lifestyle. His exaggerated, disproportionate body type is based on my research into Alice in Wonderland. This body type makes the character feel more unreal.

His skin is pale like a zombie’s. His clothes are old and dusty. His weapon is an old sword that has been with him for 500 years, since his time in Colombia. The sword has been converted into a laser weapon to match modern technology.

Character B - The Creature

The other character is a mysterious creature from an unknown planet. He is the servant of a higher being. This creature maintains the order of life and death for ordinary human beings. He is not a demon in any religious sense. He travels the universe and has been through many wars and fights.

He is muscular. He does not have a face. There are wounds all over his body. The Creature can walk throughout the universe without any sort of protection.

Pipeline

The digital sculpting is done in ZBrush; modeling, rigging, and character animation are done in Maya. The technical animation is done in Houdini and exported back to Maya as Alembic caches. I then use Maya for lighting and Nuke for compositing.

Modeling

Character A - The Dictator

This character has a normal human face but an abnormal body type. I therefore model his face on human anatomy, with a normal skeleton and muscle structure, but model his body in a very stylized way.

As the production progresses, I scale down his belly to make it easier to animate him.

Character B - The Creature

The Creature has a very muscular body type but a stylized human face. For him, I model the body according to human muscle structure but model the face in a very stylized way.

Props

Because one shot has the camera travel into the mirror, the mirror world is actually built as geometry in Maya as part of the environment.

This is the outdoor environment.

Rigging & Cloth Sim

Rig Breakdown

The Dictator rig is separated into an animation rig and a dynamic rig. The animation rig is for character animation, and the dynamic rig is for cloth simulation. The dynamic rig is integrated into the animation rig as a switch, so users can turn it on or off as needed.

The animation rig is done with the auto rigger that I scripted myself. The rig also has a fully functional facial rig that is capable of doing lip sync and cartoony deformation.

First, I do the character animation on the animation rig. Then I turn on the dynamic switch to do the cloth simulation on the single-layered sim cage. The final render geometry is bound to the cage with a wrap deformer.

Mirror Rig

Since the film has a shot that moves from one side of the mirror to the other, the character needs actual mirrored geometry instead of a reflection in the mirror. To set this up, I create a corresponding duplicate of each geometry. Then I drive each duplicate from its original with a blendshape deformer and scale the duplicates in the X direction.
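The mirroring step can be illustrated outside Maya in plain Python. This is a minimal sketch and not the rig's actual code: it assumes the mirror is the plane x = `mirror_x`, and the function name `mirror_points` is made up for the example.

```python
def mirror_points(points, mirror_x=0.0):
    """Reflect (x, y, z) points across the plane x = mirror_x.

    With mirror_x = 0 this is the same as scaling by -1 in X.
    """
    return [(2.0 * mirror_x - x, y, z) for (x, y, z) in points]


# Two sample points standing in for the original geometry.
original = [(1.0, 2.0, 3.0), (-0.5, 0.0, 4.0)]
duplicate = mirror_points(original)
```

Mirroring twice returns the original points. A negative scale also reverses the winding order of faces, so the normals of mirrored geometry may need to be checked.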

Character Animation

Since the schedule is tight, I coded some tools to speed up production. All the tools are scripted in Python.

• Picker. A tool for selecting a set of rig controls. For example, I can select all the face controls, arm controls, or leg controls at once.

• FK/IK seamless switch. A tool for switching between FK and IK seamlessly. Running the script automatically matches the FK chain to the IK chain, or the IK chain to the FK chain.

• Space Switch. A tool for switching a control from one dynamic parent to another.
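The idea behind FK/IK matching can be sketched with a two-bone chain in 2D. This is only an illustration under simplifying assumptions (a planar arm rooted at the origin, a fixed positive elbow bend); the function names are hypothetical and do not come from the film's tools.

```python
import math


def fk_to_ik_target(upper_len, lower_len, shoulder_angle, elbow_angle):
    """Forward kinematics: return the wrist position for the given FK angles.

    Angles are in radians; elbow_angle is relative to the upper bone.
    """
    elbow_x = upper_len * math.cos(shoulder_angle)
    elbow_y = upper_len * math.sin(shoulder_angle)
    wrist_x = elbow_x + lower_len * math.cos(shoulder_angle + elbow_angle)
    wrist_y = elbow_y + lower_len * math.sin(shoulder_angle + elbow_angle)
    return wrist_x, wrist_y


def ik_to_fk_angles(upper_len, lower_len, target_x, target_y):
    """Inverse kinematics: return FK angles that reach the IK target.

    Uses the law of cosines and always picks the positive elbow bend.
    """
    dist = math.hypot(target_x, target_y)
    # Clamp so an out-of-reach target gives a straight arm, not a math error.
    dist = max(abs(upper_len - lower_len), min(upper_len + lower_len, dist))
    cos_elbow = (dist**2 - upper_len**2 - lower_len**2) / (2 * upper_len * lower_len)
    elbow_angle = math.acos(max(-1.0, min(1.0, cos_elbow)))
    shoulder_angle = math.atan2(target_y, target_x) - math.atan2(
        lower_len * math.sin(elbow_angle),
        upper_len + lower_len * math.cos(elbow_angle),
    )
    return shoulder_angle, elbow_angle
```

Switching from FK to IK places the IK control at the position returned by `fk_to_ik_target`; switching from IK to FK applies the angles returned by `ik_to_fk_angles`. In both directions the limb keeps its pose, which is what makes the switch seamless.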

Lighting

Shader Breakdown

For the film, I chose a non-realistic rendering style, which I think best demonstrates the beauty of animation. I render the film with Arnold. The basic shader setup multiplies an AiFlat shader by an AiToon shader. Each geometry may have a small variation on this setup.

The AiFlat shader renders the texture image without any shading information, so it can fetch the texture color very quickly. The AiToon shader is used to produce the toon map. The map uses no interpolation, so parts of the geometry render in distinct shades without any blending between them. This gives the image its toon look.
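The multiply setup can be sketched numerically in Python. This is a rough illustration, not Arnold code: the three shade values and the function names are assumptions chosen for the example.

```python
def toon_ramp(light, shades=(0.2, 0.6, 1.0)):
    """Map a light intensity in [0, 1] to one of a few flat shades.

    No interpolation: the value snaps to a band, like a constant ramp.
    """
    light = max(0.0, min(1.0, light))
    index = min(int(light * len(shades)), len(shades) - 1)
    return shades[index]


def shade(texture_rgb, light):
    """Multiply the unlit texture color by the stepped toon value."""
    tone = toon_ramp(light)
    return tuple(channel * tone for channel in texture_rgb)
```

Two surface points with different light intensities that fall in the same band receive exactly the same shade, which produces hard-edged regions instead of a smooth gradient.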

Toon Map

multiply

Lighted Texture (AiToon)

Fog

In the film, I use a lot of fog to create a dreamy effect. In shot 12, the Dictator runs away and disappears into the fog. To create that effect, I place multiple area lights to build up multiple layers of fog, so that the character becomes less visible as he runs farther away.

Effects

Fire

The smoke and fire effects in the film are done in Houdini. The rendered fire and smoke are then composited with the images rendered from Maya to create the final result. The fire and smoke are simulated separately with Houdini's Pyro solver.

Simulated Fire in Houdini viewport

For the fire, I use some volume mix tricks to create the blue wrap and transparent sphere in the fire.

First, the Pyro solver generates 3 different fields: temperature, burn, and heat. I export them separately using the dopio node. As the node graph below shows, each delete node keeps one single field.

Then, I subtract the burn field from the heat field using the volumemix node.

heat field

Burn field

In order to make the fire look better, I scale down the burn field with a transform node.
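The effect of subtracting a smaller burn field from the heat field can be sketched on a one-dimensional row of voxels. The sample values below are made up for illustration, and `subtract_fields` is a hypothetical helper rather than a Houdini call.

```python
def subtract_fields(heat, burn):
    """Voxel-wise heat - burn for two fields sampled on the same grid."""
    if len(heat) != len(burn):
        raise ValueError("fields must have the same number of voxels")
    return [h - b for h, b in zip(heat, burn)]


# The burn field covers a smaller region than the heat field.
heat = [0.0, 0.5, 1.0, 0.5, 0.0]
burn = [0.0, 0.0, 1.0, 0.0, 0.0]
mixed = subtract_fields(heat, burn)
```

In this toy example the core of the result is emptied out while the surrounding shell survives, which is the kind of hollow, transparent region described above.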

Finally, to make the fire look right, I give the lower temperatures a blue color in the shader.

Cigar Tip

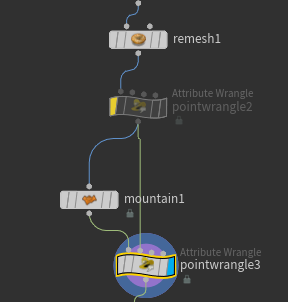

The cigar tip is also done in Houdini. First, I export the animated cigar geometry from Maya to Houdini as an Alembic. Then I delete all the geometry other than the tip, remesh it, and displace it with a Mountain node. I separate the resulting geometry into two groups, front and back, according to the P.z attribute of each point. This step is done with VEX code.

Then I color the two groups separately, with a bit of noise on the colors. Finally, I use VEX code to animate the overall color of the front group.
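The grouping and coloring logic can be sketched in Python rather than VEX. The split value, the base colors, the noise range, and which side counts as the front are all assumptions for this example.

```python
import random


def split_and_color(points, z_split=0.0, seed=0):
    """Group points into front/back by z and give each a noisy color."""
    rng = random.Random(seed)
    # Hypothetical base colors: glowing ember in front, dark ash behind.
    base = {"front": (1.0, 0.4, 0.1), "back": (0.2, 0.2, 0.2)}
    result = []
    for (x, y, z) in points:
        group = "front" if z >= z_split else "back"
        jitter = rng.uniform(-0.05, 0.05)
        color = tuple(max(0.0, min(1.0, c + jitter)) for c in base[group])
        result.append({"P": (x, y, z), "group": group, "Cd": color})
    return result
```

Each point keeps its position, gains a group name, and receives a color that differs slightly from its neighbors, so the tip does not look flat.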

Laser Gun

Generating Geometry Setup

The laser gun effect is built in Houdini as well. It is simulated with Houdini's particle system (DOP networks). The simulation contains three separate parts: the laser beam, the laser ball, and the sparkles. Each part is simulated in its own DOP network.

The positions of the 2 spheres determine the direction of the initial velocity and the position of the geometry that generates the particles. The actual vector is calculated in a wrangle node.

The Cd attribute, which determines the birth rate, depends on the distance from the center: the farther a point is from the center, the denser the particles are. The distance is calculated in a wrangle node.
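Both wrangle calculations are simple vector math and can be sketched in Python. The linear falloff, the `radius` parameter, and the function names are assumptions made for this illustration.

```python
import math


def direction(start, end):
    """Unit vector pointing from start to end (the initial velocity direction)."""
    delta = [e - s for s, e in zip(start, end)]
    length = math.sqrt(sum(d * d for d in delta))
    if length == 0.0:
        raise ValueError("the two spheres must not share a position")
    return tuple(d / length for d in delta)


def birth_weight(point, center, radius):
    """Value stored in Cd: 0 at the center, 1 at `radius` or farther."""
    dist = math.dist(point, center)
    return min(dist / radius, 1.0)
```

For example, `direction((0, 0, 0), (0, 0, 2))` points straight along Z, and a point halfway between the center and `radius` gets a weight of 0.5.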

Laser Beam Setup

The laser beam is set up by emitting particles from the sphere described above. The velocity attribute (v) is predetermined. The beam radius varies along its length because the radius of the sphere is animated.

I also add noise to the Cd attribute that determines the birth rate.

The beam color is also set up using the distance gradient. Particles in the middle of the beam are brighter than particles in the surrounding area.

Sparkles Setup

The sparkle particles are generated from the same geometry as the beam particles, but only on a single frame. Their initial velocity is a combination of three vectors: the predetermined velocity, the normal vector, and noise. The final result is obtained by adjusting the ratio of those 3 vectors.
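Blending the three vectors amounts to a weighted sum. The sketch below assumes a plain linear combination; the default weights and the function name are placeholders, not the values used in the film.

```python
def blend_velocity(initial, normal, noise, weights=(1.0, 0.5, 0.25)):
    """Weighted sum of the three vectors that drive a sparkle's launch."""
    w_initial, w_normal, w_noise = weights
    return tuple(
        w_initial * a + w_normal * b + w_noise * c
        for a, b, c in zip(initial, normal, noise)
    )
```

Raising the normal weight makes the sparkles spread outward from the surface, while raising the noise weight makes their directions more chaotic.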

Then I copy-stamp cylinders onto the simulated points and add variation to their scale, height, and color. The closer a particle is to death, the darker its color. Some noise is also added to the color.

Lightning

Basic lightning curve trails setup

The trails are created by connecting two points with curves and adding noise to those curves.

The points are created by scattering 2 different sets of points on the object that the lightning will surround.

Then I connect them one to one and resample the connected curves.

The next step is to use the Ray node to make the curves stay above the object surface. In order to do that, the ray direction needs to be pre-determined. The direction is the vector pointing from the center of the gun to each point. This vector is assigned as normal to the corresponding point.

Then I separate those curves into 3 different groups. Each group of curves sits at a different distance from the surface. The first and last points of each curve stay on the surface rather than above it.

The final step in creating the trails is to add noise to each curve, which I do with a VOP node. The farther a point is from the surface, the stronger the noise.
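The lifting and noise steps can be sketched together in Python. This is a simplified stand-in for the Ray and VOP nodes: it assumes a parabolic height profile and noise applied only along the normal, and the name `lift_curve` is hypothetical.

```python
import random


def lift_curve(surface_points, normals, height, noise_amp=0.0, seed=0):
    """Push a curve off the surface along per-point normals.

    The offset follows a parabolic arch, so the first and last points stay
    on the surface. Noise is scaled by the same arch, so it grows with height.
    """
    rng = random.Random(seed)
    count = len(surface_points)
    lifted = []
    for i, (p, n) in enumerate(zip(surface_points, normals)):
        t = i / (count - 1) if count > 1 else 0.0
        arch = 4.0 * t * (1.0 - t)  # 0 at both ends, 1 in the middle
        offset = height * arch + noise_amp * arch * rng.uniform(-1.0, 1.0)
        lifted.append(tuple(pc + nc * offset for pc, nc in zip(p, n)))
    return lifted
```

Calling the function with three different `height` values gives three groups of curves at different distances from the surface, all pinned at their end points.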

Moving lightning setup

The moving lightning setup is mostly done with wrangle nodes.

The wrangle nodes on the left loop over the curves (120 in my case). In the light-yellow wrangle node, I create 120 points, one per curve, each carrying that curve's attributes.

The node on the right loops over all the points. It mainly calculates each moving point's position on its curve and which cycle it is in. If a point’s calculated life is greater than 1, the point is deleted from the curve; if it is less than 1, the point moves to its calculated position. Without the deletion, points with a life greater than 1 would wrap back to the beginning of the trail and draw a long straight line connecting the end of the curve to its start.
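The delete-or-move rule can be sketched in Python. This example assumes each moving streak is a few points spaced evenly ahead of a head value; `streak_points`, `head_life`, and `spacing` are hypothetical names, and the real wrangle code may compute life differently.

```python
def point_on_curve(curve, u):
    """Linearly interpolate a polyline at parameter u in [0, 1]."""
    scaled = u * (len(curve) - 1)
    i = min(int(scaled), len(curve) - 2)
    f = scaled - i
    a, b = curve[i], curve[i + 1]
    return tuple(ac + (bc - ac) * f for ac, bc in zip(a, b))


def streak_points(curve, head_life, spacing, count):
    """Positions of a streak's points; points with life > 1 are dropped."""
    kept = []
    for k in range(count):
        life = head_life + k * spacing
        if life > 1.0:
            continue  # would wrap to the curve start, so delete it instead
        kept.append(point_on_curve(curve, life))
    return kept
```

Dropping the expired points rather than wrapping them is what avoids the long straight line between the end and the start of the trail.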

Before deletion

After deletion

In the end, I add color and scale to the lightning curves. The closer a point is to the surface, the redder and bigger it is.

Flower Growing

One of the most important visual elements in my film is the growing flower. At the end of the film, a street grows upward and finally forms a flower. I tried different ways to make that happen.

1. Using Individual Brick Geometries (Failed)

The first method is to build the large-scale street from individual small brick geometries. This method did not work well because the bricks look too uniform. In addition, the UV map cannot be laid out procedurally in three dimensions, so it is very hard to use texture maps on the model. Since the geometry and the color both look uniform, the result looks unnatural.

2. Using Whole Pieces of Geometries (Successful)

The second method is to form the flower from whole pieces of geometry. The flower grows procedurally by extending the length of the original geometry. Since UVs work with this method, textures are applied to make it look more natural.

In addition, irregularities are added to the base geometry as well to make the street look more natural.

3. Fog

To make the flower-growing scene match the style of the previous shots, fog is added to the scene, and a moon is added to the environment as well. These shared environment elements keep the film visually consistent from beginning to end.

Flower Demolishing

The demolishing process looks like a flower withering. To accomplish that, I make the demolishing progress from the petal edges toward the inner parts of the petals. The fractured pieces on the edges are also smaller than those in the inner parts.

1. Fracturing

To make the edge pieces smaller than the inner pieces, there need to be more points around the edge than in the inner parts. To do that, I give the points on each original petal geometry an attribute called “xIndex”. The closer a point is to the petal top, the higher its index.

xIndex visualization

Second, I separate the points into 3 different groups according to their xIndex value. For each group, I scatter points with a different density: points closer to the bottom get a lower density, and points closer to the top get a higher density.

group node network

sparse points

dense points

VEX code
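The banding logic can be sketched in Python. It assumes xIndex is normalized to the range 0 to 1 along the petal and that the three bands have equal width; the density values and function names are placeholders.

```python
def x_index(height, bottom, top):
    """Normalized 0..1 value: 0 at the petal bottom, 1 at the petal top."""
    return max(0.0, min(1.0, (height - bottom) / (top - bottom)))


def scatter_density(index, densities=(10, 40, 160)):
    """Pick a scatter density from three xIndex bands (low, mid, high)."""
    band = min(int(index * 3), 2)
    return densities[band]
```

Because the fracture pieces are built around the scattered points, a higher density near the petal top yields smaller pieces there.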

2. Adding constraints

The effect I am going for is that the smaller pieces on the edge fall first, and then the inner parts shatter. This makes the flower look as if it is withering. To achieve that, I feed multiple constraints into the RBD solver and give them different strengths. The constraints for the petal edges are weaker, and the constraints for the inner parts of the petals are stronger.

First, I transfer the attribute “xIndex” from the original geometry to the constraint geometry. Then, I modify the “strength” attribute according to the “xIndex” attribute. Then I remap strength as needed.

Then I separate the points on the constraints into different groups according to their strength value, with some randomness added. First, I put the points with strength > 0.7 into one group. Second, I split the remaining points (strength < 0.7) randomly into 4 different groups.

As with the earlier trick, I group those points according to the attributes I created. Then I promote those groups from points to primitives and separate them into different geometries to feed into the RBD solver.

Separate Constraint Groups

Rbd input constraints

I give each constraint node a different break frame and strength value, so that pieces held by different constraint groups break at different times.
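The strength remap and grouping described above can be sketched in Python. The linear remap and its end values are assumptions; the 0.7 threshold and the 4 random groups come from the text. The function names are hypothetical.

```python
import random


def strength_from_x_index(x_index, weakest=0.1, strongest=1.0):
    """Remap xIndex (1 at the petal top) to strength: edges weak, base strong."""
    return weakest * x_index + strongest * (1.0 - x_index)


def assign_constraint_group(strength, rng, threshold=0.7, weak_groups=4):
    """Strong constraints share one group; weak ones are spread at random."""
    if strength > threshold:
        return "strong"
    return f"weak_{rng.randrange(weak_groups)}"
```

Spreading the weak constraints over several random groups lets each group receive its own break frame, so the edge pieces do not all release on the same frame.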

3. Debris

For the debris, I only want the pieces that have already broken off from the original geometry to generate debris. Also, the amount of debris from each piece should increase and then decrease as the piece falls. To do that, I use VEX code to control the birth rate.

First, I give each point an attribute that records its rest position in P.y. Then I calculate the birth rate from each point's displacement from that rest position.
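One way to get a birth rate that rises and then falls with displacement is a triangle profile, sketched below in Python. The profile shape, `peak_drop`, and `max_rate` are assumptions for illustration and are not taken from the VEX code.

```python
def debris_birth_rate(rest_y, current_y, peak_drop=1.0, max_rate=100.0):
    """Birth rate that rises, peaks at `peak_drop`, then falls back to zero.

    A piece that has not moved from its rest height emits nothing.
    """
    drop = abs(rest_y - current_y)
    if drop >= 2.0 * peak_drop:
        return 0.0
    # Triangle profile: 0 at rest, max at peak_drop, 0 again at 2 * peak_drop.
    return max_rate * (1.0 - abs(drop - peak_drop) / peak_drop)
```

Since the rate is zero at zero displacement, pieces still attached to the original geometry never emit debris.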

4. Ash

The ash is more straightforward. I generate a volume from the debris points. Since the ash is mostly just falling, a voxel size of 10 is small enough. The only shaping applied in the Pyro solver is dissipation and a little disturbance. Since the ash is falling, I also apply gravity to it.